In the ever-expanding world of robotics, one of the most complex and essential challenges is enabling AI robots to accurately recognize and interact with objects in cluttered, real-world settings. Unlike controlled lab environments, homes, warehouses, and outdoor areas present unpredictable lighting, occlusions, overlapping items, and chaotic spatial layouts.

But how do developers and researchers train AI-powered robots to navigate this visual chaos?

Let’s break down the multi-stage process, key technologies, data strategies, and real-world applications of training AI robots to perform robust object recognition in cluttered environments.

Why Cluttered Scene Recognition Matters

Whether it’s a home robot cleaning your living room or a robotic assistant helping seniors pick up a fallen item, being able to detect objects amid chaos is mission-critical.

Real environments are messy. Cups are placed behind books, toys are under couches, groceries are packed in mixed boxes. A robot trained only on isolated, clean object datasets will fail when it meets a real house.

Cluttered scene understanding is also foundational for robots in the following domains:

- Elder care: AI Robots for Seniors

- Child learning environments: AI Robots for Kids

- Emotionally aware systems: Emotional AI Robots

- Desktop assistant robots in chaotic offices: Desktop Robot Assistants

Step-by-Step Breakdown: How to Train AI Robots for Cluttered Object Detection

1. Curating a Clutter-Realistic Dataset

The first step is data. Robots learn what they see, and if what they see during training isn’t realistic, they will fail in cluttered environments.

Key Dataset Strategies:

- Simulate messy environments using randomized object placement

- Capture 3D scenes with RGB-D cameras (color + depth)

- Include occlusions (objects partly hidden)

- Use domain randomization in simulations to train for real-world chaos

- Capture data from real homes, kids’ playrooms, office desks, garages

Some popular datasets used for this purpose include:

- YCB Video Dataset

- ObjectNet3D

- SceneNet RGB-D

- AI2-THOR (Simulated indoor scenes)

You can also build your own generation pipeline with tools like Unity3D or Blender to render thousands of cluttered scenes synthetically.
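As a rough illustration of randomized object placement, the sketch below samples a cluttered table layout together with randomized lighting. Everything in it is an assumption made for illustration: the `PlacedObject` type, the `sample_cluttered_scene` function, the table size, and the lighting ranges are hypothetical and not tied to Unity3D, Blender, or any dataset named above. A real pipeline would pass a description like this to a renderer.

```python
import random
from dataclasses import dataclass


@dataclass
class PlacedObject:
    name: str
    x: float        # metres along the table width
    y: float        # metres along the table depth
    yaw_deg: float  # rotation about the vertical axis


def sample_cluttered_scene(object_names, n_objects, table_size=(1.0, 0.6), seed=None):
    """Sample a random layout and lighting setup for one synthetic scene."""
    rng = random.Random(seed)
    width, depth = table_size
    objects = [
        PlacedObject(
            name=rng.choice(object_names),
            x=rng.uniform(0.0, width),
            y=rng.uniform(0.0, depth),
            yaw_deg=rng.uniform(0.0, 360.0),
        )
        for _ in range(n_objects)
    ]
    # Hypothetical lighting ranges; positions may overlap, which is the point of clutter.
    lighting = {
        "intensity": rng.uniform(0.3, 1.5),
        "color_temp_k": rng.uniform(2700.0, 6500.0),
    }
    return {"objects": objects, "lighting": lighting}


scene = sample_cluttered_scene(["mug", "book", "toy_car"], n_objects=8, seed=0)
print(len(scene["objects"]), "objects, lighting:", scene["lighting"])
```

Fixing the seed makes a scene reproducible, which helps when a rendered image needs to be regenerated with different annotations.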

2. Object Annotation & Segmentation

Once you have your dataset, you need to label each object – and not just with bounding boxes, but with pixel-level segmentation. This allows robots to learn not just where objects are, but what their boundaries are, even when overlapping.

Annotation Types:

- Semantic segmentation: Assign every pixel a class label, without separating individual objects

- Instance segmentation: Label each object instance separately, even when several belong to the same category

- 3D bounding box annotation for depth-aware object understanding
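To make the difference between these annotation types concrete, here is a minimal sketch using a tiny hand-written instance mask. The mask layout, the class id `7`, and the function names are assumptions for illustration only. It derives 2D bounding boxes and a semantic mask from the instance mask, which shows why instance labels are the richer annotation: the other two can be computed from them, but not the reverse.

```python
def instance_to_boxes(instance_mask):
    """Return {instance_id: (row_min, col_min, row_max, col_max)}; id 0 is background."""
    boxes = {}
    for r, row in enumerate(instance_mask):
        for c, inst in enumerate(row):
            if inst == 0:
                continue
            if inst not in boxes:
                boxes[inst] = (r, c, r, c)
            else:
                r0, c0, r1, c1 = boxes[inst]
                boxes[inst] = (min(r0, r), min(c0, c), max(r1, r), max(c1, c))
    return boxes


def instance_to_semantic(instance_mask, instance_class):
    """Replace each instance id with its class id (background stays 0)."""
    return [[instance_class.get(inst, 0) for inst in row] for row in instance_mask]


# Two touching cups: instance 1 and instance 2, both of (hypothetical) class 7.
mask = [
    [0, 1, 1, 0],
    [0, 1, 2, 2],
    [0, 0, 2, 2],
]
classes = {1: 7, 2: 7}

print(instance_to_boxes(mask))
print(instance_to_semantic(mask, classes))
```

In the semantic mask the two cups merge into one blob of class 7, which is exactly the information a robot loses if it is trained on semantic labels alone.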

Tools:

- Labelbox

- VGG Image Annotator (VIA)

- Supervisely

- Blender + Python scripts

3. Choosing the Right Neural Network Architecture

For AI robots, your object recognition model must be compact enough for on-device inference, fast enough to run in real time, and capable of spatial reasoning. A few options include:

Convolutional Neural Networks (CNNs):

- Faster R-CNN: High accuracy but slower

- YOLOv8: Fast and highly customizable

- EfficientDet: Balanced performance
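Detectors in this family typically finish with non-maximum suppression (NMS), which discards boxes that overlap a higher-scoring box too much. In clutter this step deserves attention, because two real objects sitting side by side can overlap heavily. The sketch below is a plain greedy NMS written for illustration; the boxes, scores, and thresholds are made-up values, and production code would normally use the library's own implementation.

```python
def iou(a, b):
    """Intersection over union for boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(detections, iou_threshold):
    """Greedy NMS over a list of (box, score); returns the kept detections."""
    kept = []
    for box, score in sorted(detections, key=lambda d: d[1], reverse=True):
        # Keep a box only if it does not overlap any already-kept box too much.
        if all(iou(box, k[0]) <= iou_threshold for k in kept):
            kept.append((box, score))
    return kept


# Two mugs standing close together: their boxes overlap with IoU of about 0.54.
mugs = [((0, 0, 10, 10), 0.9), ((3, 0, 13, 10), 0.8)]
print(len(nms(mugs, iou_threshold=0.5)))  # 1: the second mug is suppressed
print(len(nms(mugs, iou_threshold=0.6)))  # 2: both mugs survive
```

The same pair of detections yields one object or two depending on the threshold, so the value is worth tuning on cluttered validation scenes rather than leaving at a default.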

Transformer-Based Vision Models:

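- DETR (DEtection TRansformer): Predicts a set of objects directly, without non-maximum suppression, which can help when objects overlap heavily

- Swin Transformer: A hierarchical backbone that computes attention within shifted local windows, usually paired with a detection or segmentation head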

Depth-Aware Models:

- Combine RGB + depth (RGB-D) input using architectures such as FuseNet, or 3D U-Net when the scene is represented as a voxel volume

4. Sim-to-Real Transfer Learning

Robots are often trained in simulation, but the real world is more complex. Sim-to-real transfer techniques aim to narrow this gap.

Strategies:

- Domain Randomization: Randomize lighting, color, noise, object textures in simulation

- Fine-Tuning with Real Data: Transfer a model from simulation to real camera feeds

- Adversarial Training: Use GANs to translate synthetic images so that they look closer to real camera input
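Appearance-level domain randomization can be sketched in a few lines. The example below perturbs a small grayscale image with a random brightness gain and Gaussian pixel noise. The function name, the gain range, and the noise level are assumptions chosen for illustration; a real pipeline would also randomize textures and colors, usually inside the simulator or an augmentation library.

```python
import random


def randomize_appearance(image, rng, gain_range=(0.6, 1.4), noise_sigma=8.0):
    """Apply a random brightness gain and per-pixel Gaussian noise.

    `image` is a list of rows of 0-255 grayscale values; a new image is returned.
    """
    gain = rng.uniform(*gain_range)  # one gain per image mimics a lighting change
    randomized = []
    for row in image:
        randomized.append([
            min(255, max(0, round(p * gain + rng.gauss(0.0, noise_sigma))))
            for p in row
        ])
    return randomized


rng = random.Random(0)
synthetic = [[0, 128, 255], [10, 20, 30]]
for _ in range(3):
    # Each call yields a different lighting/noise variant of the same scene.
    print(randomize_appearance(synthetic, rng))
```

Calling this on every training sample means the model never sees exactly the same lighting twice, which is the core idea behind randomizing the simulator instead of trying to make it photorealistic.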

5. Incorporating Spatial Attention

In cluttered scenes, it’s not enough to detect objects—you need to focus attention.

Attention-based AI models dynamically highlight parts of an image that are most relevant. This helps avoid confusion when objects overlap.

Examples:

- Self-Attention layers in Transformers

- Visual saliency prediction

- Spatial reasoning modules in multi-object detection networks
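For readers who want to see what a self-attention layer computes, here is a minimal single-head scaled dot-product attention in plain Python. It is a teaching sketch under simplifying assumptions: queries, keys, and values are all the raw input tokens (no learned projection matrices), and the three 2-D "patch features" are invented for the example.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Scaled dot-product self-attention with Q = K = V = tokens.

    Returns (outputs, weights); weights[i][j] is how much token i attends to token j.
    """
    d = len(tokens[0])
    outputs, weights = [], []
    for q in tokens:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in tokens]
        w = softmax(scores)
        weights.append(w)
        outputs.append([sum(wj * v[i] for wj, v in zip(w, tokens)) for i in range(d)])
    return outputs, weights


# Hypothetical patch features: two patches from the same mug, one from the background.
patches = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
outputs, weights = self_attention(patches)
print(weights[0])  # the first mug patch attends more to the other mug patch
```

Each row of `weights` sums to 1, so attention acts as a learned, content-dependent averaging over the scene. In a trained model the projections decide which patches count as "similar".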

6. Multimodal Input for Contextual Reasoning

Clutter isn’t just a visual problem; it also calls for contextual reasoning. A toy next to a plate is unlikely to be food.

To help AI robots reason better:

- Combine vision with natural language (e.g., “Find the red cup next to the book”)

- Use audio cues (e.g., if a phone rings, localize it)

- Object affordance models: Knowing what an object can do helps identify it
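A command such as "Find the red cup next to the book" can be resolved against detector output with simple geometry once the sentence has been parsed. The sketch below assumes the parsing is already done and that each detection carries a label, a color, and an image-space center. The `Detection` type and `find_next_to` function are hypothetical names, and "next to" is approximated as "closest to".

```python
import math
from dataclasses import dataclass


@dataclass
class Detection:
    label: str
    color: str
    center: tuple  # (x, y) in image pixels


def find_next_to(detections, target_label, target_color, anchor_label):
    """Return the matching target closest to any anchor object, or None."""
    anchors = [d for d in detections if d.label == anchor_label]
    candidates = [
        d for d in detections
        if d.label == target_label and d.color == target_color
    ]
    if not anchors or not candidates:
        return None
    return min(
        candidates,
        key=lambda c: min(math.dist(c.center, a.center) for a in anchors),
    )


scene = [
    Detection("cup", "red", (10, 10)),
    Detection("cup", "red", (200, 50)),
    Detection("cup", "blue", (195, 55)),
    Detection("book", "brown", (210, 60)),
]
print(find_next_to(scene, "cup", "red", "book"))
```

The language cue removes two kinds of ambiguity at once here: the color filter rules out the blue cup, and the spatial relation picks between the two red ones.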

7. Physical Interaction Feedback

AI robots don’t just look—they touch.

Using feedback from physical interaction (via robotic arms or sensors), robots can:

- Confirm object presence

- Re-orient items for better visibility

- Eliminate false positives by trying to grasp the object

This loop of perception–action–feedback is key to accurate recognition in clutter.
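One simple way to model this loop is a Bayesian update: the vision system supplies a prior belief that the object is present, and the outcome of a grasp attempt revises it. The sketch below is an illustration under assumed numbers. The two likelihoods (how often a grasp succeeds when the object is or is not really there) are hypothetical and would have to be measured on a real gripper.

```python
def update_presence(prior, grasp_succeeded,
                    p_success_if_present=0.8, p_success_if_absent=0.05):
    """Posterior probability that the object is present after one grasp attempt."""
    if grasp_succeeded:
        like_present, like_absent = p_success_if_present, p_success_if_absent
    else:
        like_present, like_absent = 1.0 - p_success_if_present, 1.0 - p_success_if_absent
    evidence = like_present * prior + like_absent * (1.0 - prior)
    return like_present * prior / evidence


belief = 0.5  # the detector is unsure whether that blob is really a cup
print(update_presence(belief, grasp_succeeded=True))   # belief rises
print(update_presence(belief, grasp_succeeded=False))  # belief falls
```

With these assumed likelihoods, a successful grasp moves a 50% belief to roughly 94%, while a failed grasp drops it to roughly 17%, which is how touch can eliminate a visual false positive.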

Real-World Use Cases of AI Robots with Object Recognition

In Homes: Smart AI Companions

AI home robots like those featured in our AI Robots for Home category use clutter-aware recognition to:

- Find dropped items

- Fetch specific objects (“Get my glasses from the table”)

- Clean around obstructions

In Classrooms: Educational Robots

Cluttered desks are no issue for AI Robots for Kids, which learn:

- To identify educational materials like books, pencils

- To distinguish between tools and toys

- To visually assist children in problem-solving tasks

For Seniors: Assistive AI

For AI Robots for Seniors, object recognition aids in:

- Medication detection and reminder

- Fall-detection scenarios

- Navigating through clutter safely

Common Challenges in Cluttered Object Recognition

- Occlusion: When one object hides part of another

- Similar-looking objects: For example, a white cup vs. a white bowl

- Real-time performance: Keeping models light for on-device inference

- Lighting variation: Shadows, glare, night conditions

- Dynamic environments: Constantly changing object locations

Emerging Trends for 2025 and Beyond

- Neural Radiance Fields (NeRFs): Reconstructing 3D scenes from a set of posed 2D images, with newer variants aimed at working from only a few views

- Open-Vocabulary Object Detection: Recognize object categories that were never labeled in the detector’s training set, typically by drawing on vision-language pretraining

- Interactive AI Companions: Combine emotion, language, and object recognition

- Privacy-Aware Recognition: Detect objects without storing images or compromising privacy

FAQs – AI Robots in Cluttered Scenes

Q1: What are the best robot models trained for cluttered environments?

A1: No single model is best for every robot. Combinations of YOLOv8, Swin Transformers, and RGB-D fusion look promising, but results depend on the robot’s hardware and task.

Q2: Do educational AI robots work well in messy classrooms?

A2: Yes! Our Top AI Robots for Kids are designed to handle dynamic, messy environments with advanced object segmentation.

Q3: Can AI robots help locate lost items?

A3: Many domestic and personal robots now come with object search functionalities that use real-time scene segmentation and learned object models to identify misplaced items.

Q4: Is vision alone enough for robots to recognize objects?

A4: Vision is the primary input, but best-performing systems use multimodal fusion (vision + audio + tactile data) for higher reliability.

Training AI Robots to Recognize Objects in Cluttered Environments: Deep Dive into Real-World Perception

Why Object Recognition in Cluttered Environments Matters

Unlike the pristine lab conditions many AI robots are tested in, real-world environments—especially homes, offices, or hospitals—are full of unpredictable obstacles. Books stacked on couches, toys under the table, bags draped on chairs, overlapping tools on workbenches—the real world is chaotic.

AI robots must not only see but understand this chaos to be useful.

For example:

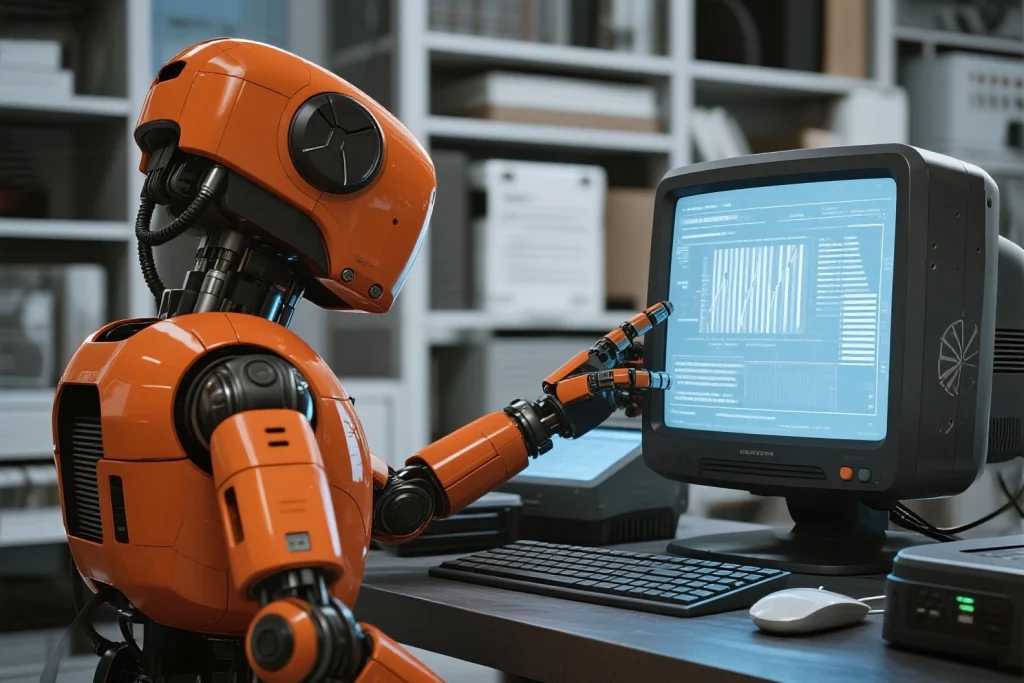

- A desktop robot assistant must find your coffee mug hidden behind your monitor.

- A home robot vacuum needs to distinguish between a sock and a cable.

- An emotional AI companion robot needs to navigate your room without tripping over shoes or mistaking a blanket for a pet.

Training AI robots for cluttered scenes isn’t just a technical hurdle—it’s the key to making them truly intelligent and applicable in everyday human life.

Explore more related categories on AI Robots for Home and Desktop Robot Assistants.

Core Challenges in Cluttered Object Recognition

Before diving into the solutions, we must understand the main challenges AI robots face:

1. Occlusion

Objects often overlap or block each other partially—something simple for a human brain to parse, but difficult for AI.

2. Lighting and Shadows

Poor lighting, reflective surfaces, or unusual shadow angles can distort object boundaries.

3. Background Complexity

Complex or patterned backgrounds make object edges difficult to define.

4. Sensor Noise

Inaccuracies from cameras or depth sensors introduce uncertainty in perception.

5. Object Variety

One class (e.g., “mug”) may appear in dozens of colors, sizes, and textures.

Step-by-Step Guide: How AI Robots Are Trained to Handle Cluttered Scenes

Below is a comprehensive technical roadmap that real-world roboticists and AI engineers follow to tackle this challenge.

Step 1: Data Collection in Messy Environments

Approach:

- Collect thousands of images or videos from real homes, garages, kitchens, and offices.

- Use 3D scanning (LiDAR, RGB-D cameras) to map physical space accurately.

Tools & Platforms:

- LabelFusion, ScanNet, and YCB Video Dataset offer real-world cluttered scene datasets.

Key Concept:

Labelled data from real-world clutter allows deep learning models to understand how occlusion, overlap, and chaos behave.

Explore our reviews of AI robots that benefit from this on AI Robot Reviews.

Step 2: Synthetic Data Augmentation

Since real-world data is expensive to collect and annotate, researchers also generate synthetic cluttered scenes.

Methods:

- Use simulation environments like Unity3D, NVIDIA Isaac Sim, or Isaac Gym.

- Randomly place 3D models of objects in synthetic rooms with messy layouts.

- Train on large numbers of these scenes to cover far more clutter combinations than could be photographed by hand.

Advantages:

- Easier annotation

- Control over lighting, angles, and occlusion

- Cheaper and scalable

Step 3: Deep Learning for Visual Perception

Once datasets are ready, AI models are trained using deep learning architectures. The most common ones include:

- YOLOv8 / YOLOv9 – Real-time object detection

- Mask R-CNN – Instance segmentation for cluttered object masks

- DETR / DINO (the DETR-based detector) – Transformer-based object detection

- SAM (Segment Anything Model) – Promptable, class-agnostic segmentation that can be applied zero-shot to new scenes

Training Strategy:

- Train the model to detect and classify multiple overlapping objects.

- Teach it to “segment” each object’s boundary.

- Fine-tune on domain-specific cluttered scenes (e.g., kitchen, bedroom, lab).

Step 4: Multi-Sensor Fusion

Vision alone isn’t always enough. AI robots often combine multiple sensors to “see” through clutter.

Fusion Techniques:

- RGB + Depth Cameras

- LiDAR + Cameras

- Tactile sensors + Vision (for object grasping in clutter)

Why Fusion Matters:

It allows robots to “feel” and “see” simultaneously, helping them tell a pillow from a pet or a sheet of paper from a plate.
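A common, simple form of fusion is late fusion: each sensor produces its own class probabilities, and the robot combines them. The sketch below multiplies the per-class probabilities and renormalizes, which assumes the sensors are conditionally independent and the class prior is uniform. The function name and the probability values are invented for illustration.

```python
def fuse(per_sensor_probs):
    """Fuse per-sensor class probabilities by product, then renormalize.

    Assumes every dict has the same classes and that at least one class
    has non-zero probability under all sensors.
    """
    classes = list(per_sensor_probs[0])
    fused = {c: 1.0 for c in classes}
    for probs in per_sensor_probs:
        for c in classes:
            fused[c] *= probs[c]
    total = sum(fused.values())
    return {c: v / total for c, v in fused.items()}


camera = {"pillow": 0.55, "pet": 0.45}   # vision alone is nearly undecided
tactile = {"pillow": 0.20, "pet": 0.80}  # the touch sensor senses warmth and movement
print(fuse([camera, tactile]))
```

Here the camera slightly favors "pillow", but the fused estimate clearly favors "pet", because the tactile evidence is much more decisive than the visual evidence.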

Some of the best AI Robots for Seniors use these strategies to navigate homes safely.

Step 5: 3D Scene Reconstruction

Using SLAM (Simultaneous Localization and Mapping) and neural radiance fields (NeRF), AI robots can build 3D maps of their environment.

Use Case:

- A home robot reconstructs your room and stores object positions in memory.

- It predicts where your keys might be even if they are only partially visible.
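Storing object positions starts with turning a detected pixel plus its depth reading into a 3D point. The sketch below uses the standard pinhole camera model for that step and keeps the result in a small dictionary. The intrinsics (`FX`, `FY`, `CX`, `CY`) are hypothetical values for a 640x480 camera, and the points stay in the camera frame; a SLAM system would additionally supply the camera pose needed to move them into a shared map frame, which is not shown.

```python
def backproject(u, v, depth_m, fx, fy, cx, cy):
    """Map pixel (u, v) with depth in metres to a 3D point in the camera frame."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


# Hypothetical intrinsics for a 640x480 RGB-D camera.
FX = FY = 600.0
CX, CY = 320.0, 240.0

object_memory = {}  # label -> last known 3D position (camera frame)


def remember(label, u, v, depth_m):
    """Record where an object was last seen."""
    object_memory[label] = backproject(u, v, depth_m, FX, FY, CX, CY)


remember("keys", u=620, v=240, depth_m=2.0)
print(object_memory["keys"])  # (1.0, 0.0, 2.0): one metre to the right, two metres ahead
```

Once positions live in a map, the robot can answer "where did I last see the keys?" without re-scanning the room.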

Step 6: Object Affordance Prediction

Beyond recognition, robots are trained to understand what they can do with an object.

Example:

A mug is not just a “cylinder”: it is an object you can grasp, fill, and drink from.

This is where techniques like affordance learning come in, teaching robots not just to recognize but also interact.

Step 7: Active Learning with Human Feedback

Sometimes robots make mistakes. A human can point and say, “No, that’s a toy, not trash.”

Active Learning Loop:

- Robot proposes a label → Human gives feedback → Model adjusts its understanding.

- Over time, the robot builds a better understanding of your specific cluttered space.
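A practical question in this loop is which predictions the robot should ask about, since human attention is limited. One widely used heuristic is uncertainty sampling: query the items whose predicted class distribution has the highest entropy. The sketch below illustrates that heuristic; the item names and probabilities are invented, and other selection criteria are possible.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a class probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_feedback(predictions, k):
    """Return the ids of the k most uncertain predictions.

    `predictions` maps an item id to its list of class probabilities.
    """
    ranked = sorted(predictions, key=lambda i: entropy(predictions[i]), reverse=True)
    return ranked[:k]


# Hypothetical [toy, trash] probabilities for three objects on the floor.
predictions = {
    "plush_bear": [0.98, 0.02],
    "crumpled_drawing": [0.50, 0.50],
    "broken_crayon": [0.70, 0.30],
}
print(select_for_feedback(predictions, k=2))  # ['crumpled_drawing', 'broken_crayon']
```

The robot skips the plush bear it is already sure about and spends the human's time on the two items where a correction teaches it the most.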

Explore interactive robots that implement this feedback loop on Interactive AI Companions for Adults.

Emerging Technologies Powering This Evolution

Let’s briefly explore some cutting-edge methods enabling object recognition in clutter:

1. Diffusion Models + Vision

Explored for denoising sensor input and for inpainting the hidden parts of occluded items.

2. NeRFs for View Synthesis

Robots can “imagine” what the other side of an object might look like.

3. Large Vision-Language Models (VLMs)

Models such as GPT-4V or Flamingo can reason about scenes from visual cues and language prompts like “Find the blue bottle under the table.”

Real-World Example: EMO & Miko Robots

Many emotional and educational AI robots are integrating clutter-tolerant vision systems.

- EMO (see in-depth review) – Learns to avoid desk clutter and still maintain interaction.

- Miko 3 – Designed to operate even when kids leave toys everywhere.

These robots combine learning-based visual perception with real-time scene adaptation.

Key Takeaways: Building Smarter Perception in AI Robots

| Feature | Why It Matters |

|---|---|

| Deep Learning Vision | Fast, real-time clutter detection |

| Sensor Fusion | Understands beyond visuals |

| 3D Mapping | Remembers where things are |

| Affordance Learning | Enables intelligent interaction |

| Feedback Loops | Adapts to human preferences |

FAQ Section

Q: Can AI robots recognize partially hidden objects?

Yes, in many cases. Using deep learning and 3D mapping, modern AI robots can identify many partially occluded items, though accuracy drops as more of the object is hidden.

Q: How do AI robots avoid mistaking one object for another in clutter?

By combining RGB, depth, and tactile data, and by learning from contextual cues.

Q: What training data is used for cluttered object detection?

Datasets like YCB Video, SceneNet RGB-D, and custom home-scan datasets are popular.

Q: Which robots are best at handling messy environments?

AI robots featured in AI Robots for Home and Emotional AI Robots categories tend to have more robust perception systems.

Related Pages to Explore

- Emotional AI Robots for Mental Health

- Top Emotional Robots for Kids

- Affordable Robot Gift Ideas

- Top Robot Gifts for Seniors

All trademarks, product names, and brand logos belong to their respective owners. didiar.com is an independent platform providing reviews, comparisons, and recommendations. We are not affiliated with or endorsed by any of these brands, and we do not handle product sales or fulfillment.

Some content on didiar.com may be sponsored or created in partnership with brands. Sponsored content is clearly labeled as such to distinguish it from our independent reviews and recommendations.

For more details, see our Terms and Conditions.


AI Robot Tech Hub
